During ‘Tesla Autonomy Day’, Tesla unloaded a lot of detailed information about its future autonomy plans on both the hardware and software sides. Here is everything we learned during ‘Tesla Autonomy Day’.

Tesla Full Self-Driving Computer

Both owners and fans of Tesla have been waiting on the mythical Hardware 3 that would be able to deliver Full Self-Driving. To start off the presentation, Elon Musk brought Peter Bannon on to the stage. Bannon previously worked at Apple on its successful A-series chips before coming over to Tesla in 2016.

Now Bannon goes really in depth about the chip design, and I mean really into it. If you do not have a Computer Science degree or a fascination with board design, then 90% of what Bannon said will be lost on you. That is not a bad thing: those who know computer hardware will be able to appreciate the design and evaluate whether it can support Tesla’s autonomy goals. But given the dense subject matter, I will try to highlight all the key points.

Bannon states that the new chip was built from the ground up, as there was nothing on the market that would be able to take advantage of Tesla’s neural net. Musk states that this design was finalized about 1.5-2 years ago and that they are already working on the next version.

The new hardware was placed in every Model S/X produced in March, and they started producing Model 3s with the new computer 10 days prior to the event.

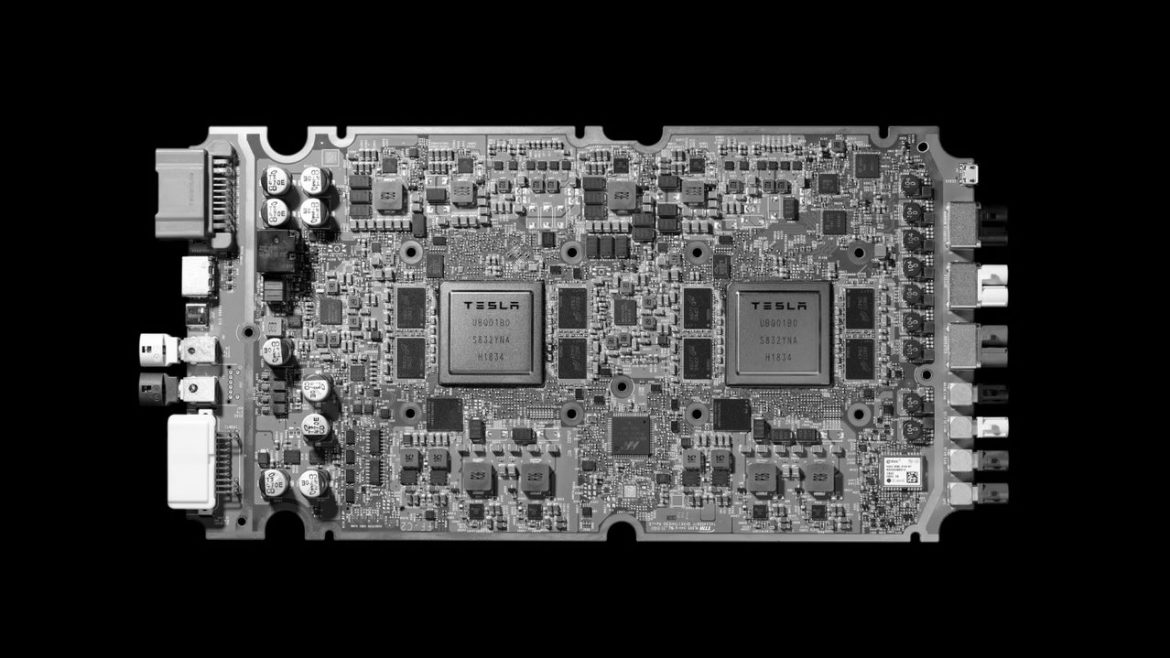

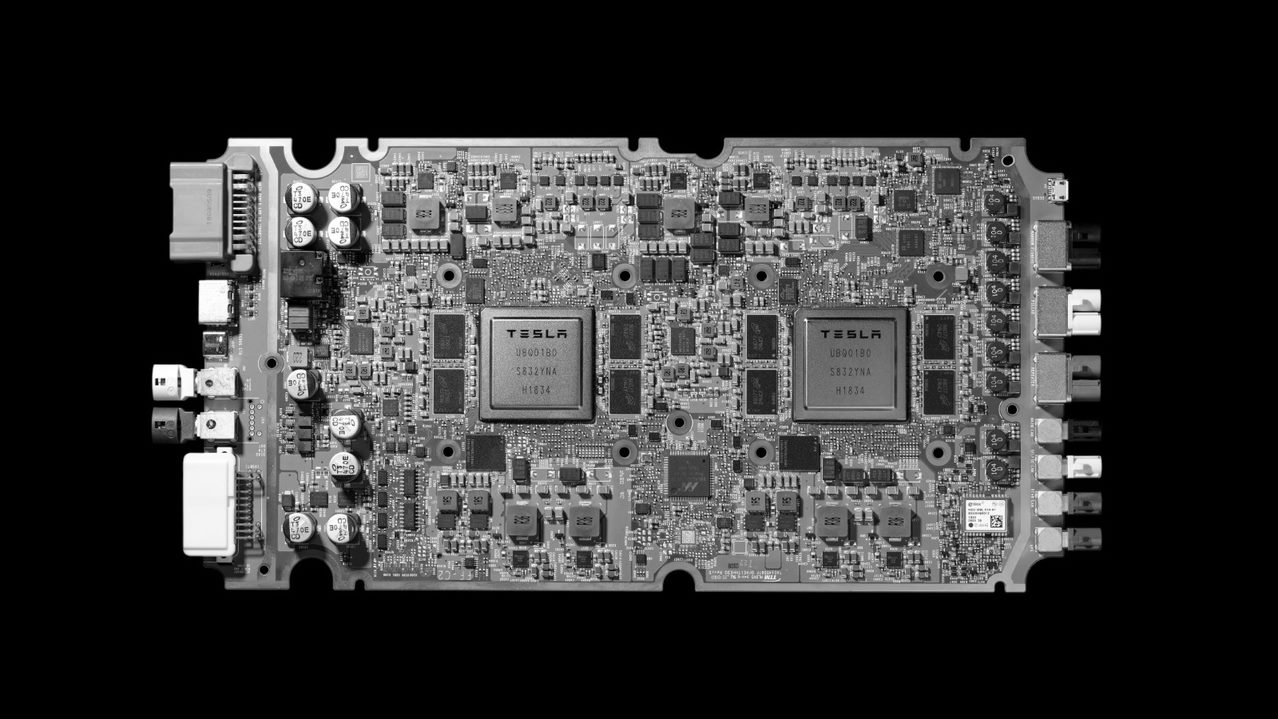

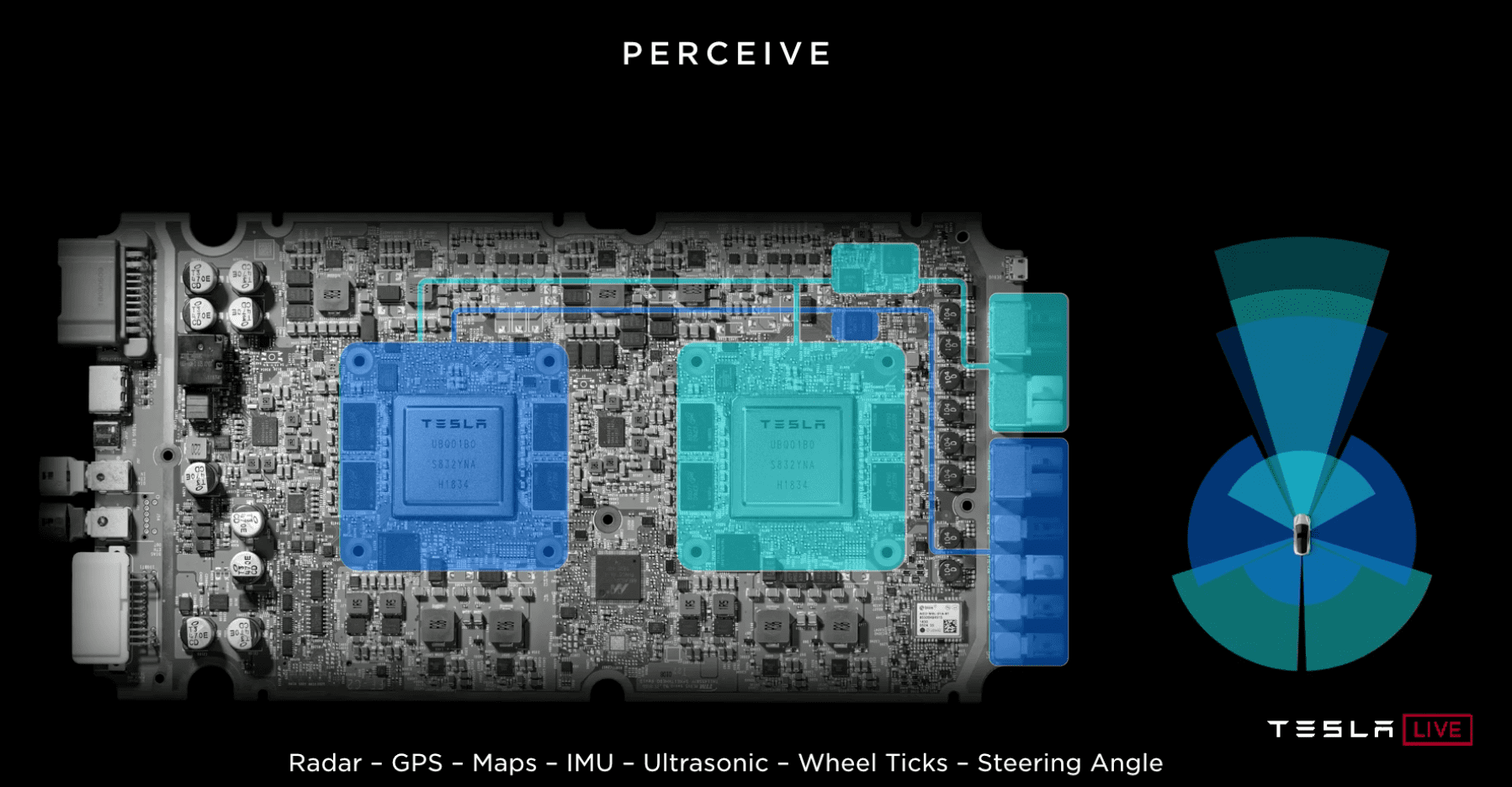

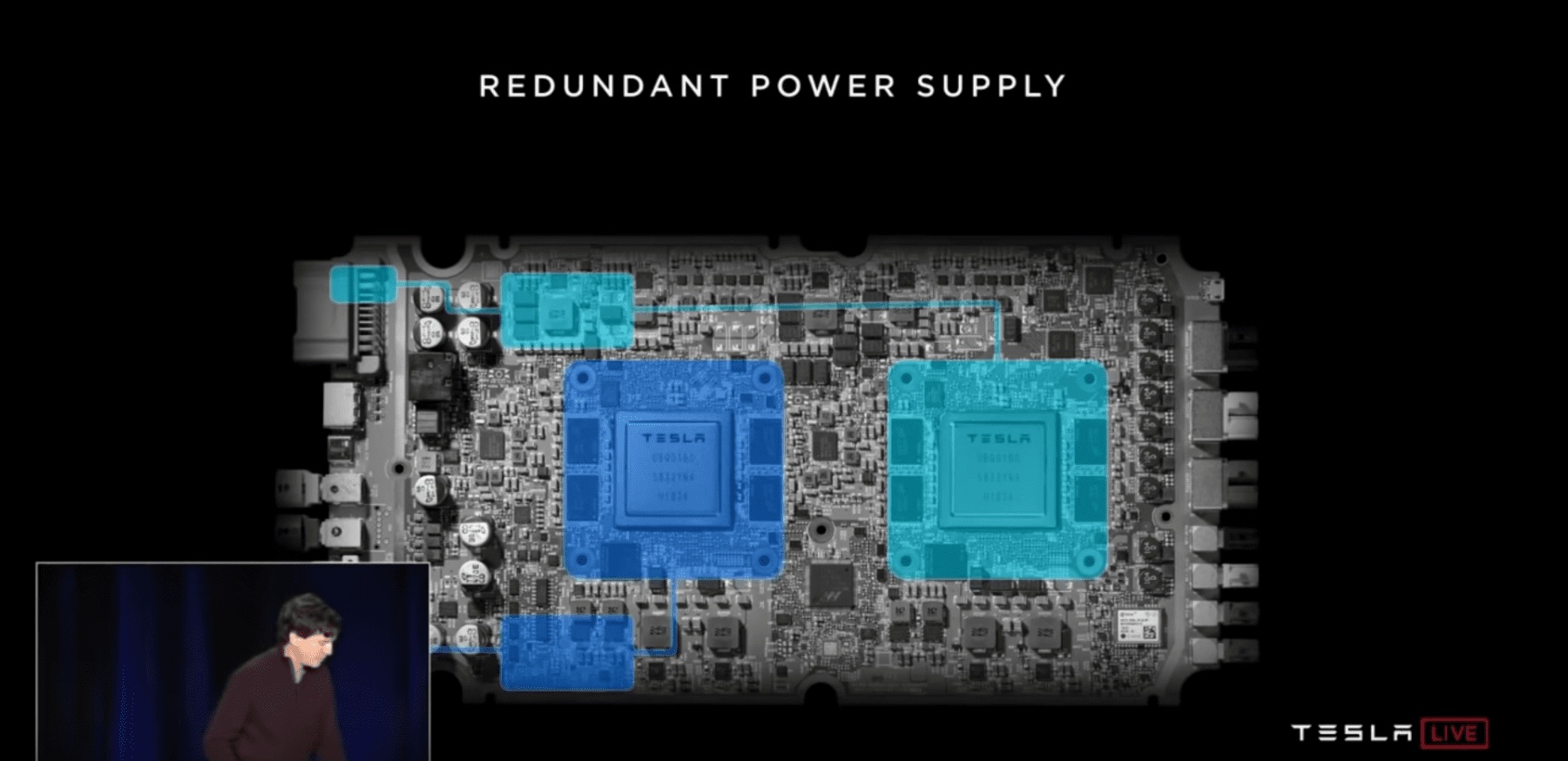

The new board was shown off, featuring two chips placed prominently side by side. Additionally, Hardware 3 keeps the same overall shape as Hardware 2.5 so that it can be retrofitted into older vehicles.

The two-chip design is very important in keeping Full Self-Driving safe. Each chip performs its own calculations based on the information from the various sensors. Before any action is carried out (e.g. turning the steering wheel), the two processors compare their planned courses of action. If they match, the car proceeds with the action. However, if the two chips disagree, the car does not proceed and tries again.
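
To make the compare-then-act idea concrete, here is a minimal Python sketch. It is not Tesla's implementation: the function name `agreed_action`, the string-valued actions, the retry limit, and the toy planners are all assumptions made for illustration.

```python
from typing import Callable, Optional

Action = str  # hypothetical stand-in for a real control command


def agreed_action(
    plan_a: Callable[[dict], Action],
    plan_b: Callable[[dict], Action],
    read_sensors: Callable[[], dict],
    max_attempts: int = 3,  # assumed retry limit, not a published figure
) -> Optional[Action]:
    """Return an action only if both planners independently agree on it."""
    for _ in range(max_attempts):
        frame = read_sensors()      # both planners see the same sensor data
        a = plan_a(frame)           # "chip A" computes its plan
        b = plan_b(frame)           # "chip B" computes its plan
        if a == b:
            return a                # agreement: safe to act
        # disagreement: do nothing this round and try again on fresh data
    return None                     # no agreement within the retry budget


def read_sensors() -> dict:
    # Made-up sensor reading for the example
    return {"lane_offset_m": 0.4}


def planner(frame: dict) -> Action:
    return "steer_left" if frame["lane_offset_m"] > 0.2 else "hold"


def stubborn_planner(frame: dict) -> Action:
    return "hold"


print(agreed_action(planner, planner, read_sensors))
print(agreed_action(planner, stubborn_planner, read_sensors))
```

The first call has two planners that agree, so an action comes back. The second pairs them with a planner that always disagrees, so the function gives up and returns `None` rather than acting on a contested decision.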

On top of the safety from a decision point of view, the system is also completely redundant. According to Musk, “any part of this could fail and the car keeps driving”. The system is essentially split in half, with one processor and half of the cameras running off one power supply, and the other processor and the other half of the cameras running off a different power supply.

Bannon then goes deep into the design of the chip. He does point out that the chip used in Hardware 3 is not the most powerful chip in the world. He notes that it is larger than the one found in your cell phone but smaller than one found in a high-end GPU. Given that this chip is purpose-built to Tesla’s specifications, it doesn’t have to be overpowered and expensive.

For those that understand, here is a rundown of the specifications. If you are really interested in the nitty-gritty, then I suggest you watch the video, as I am unable to fully deliver the technical aspects in great enough detail.

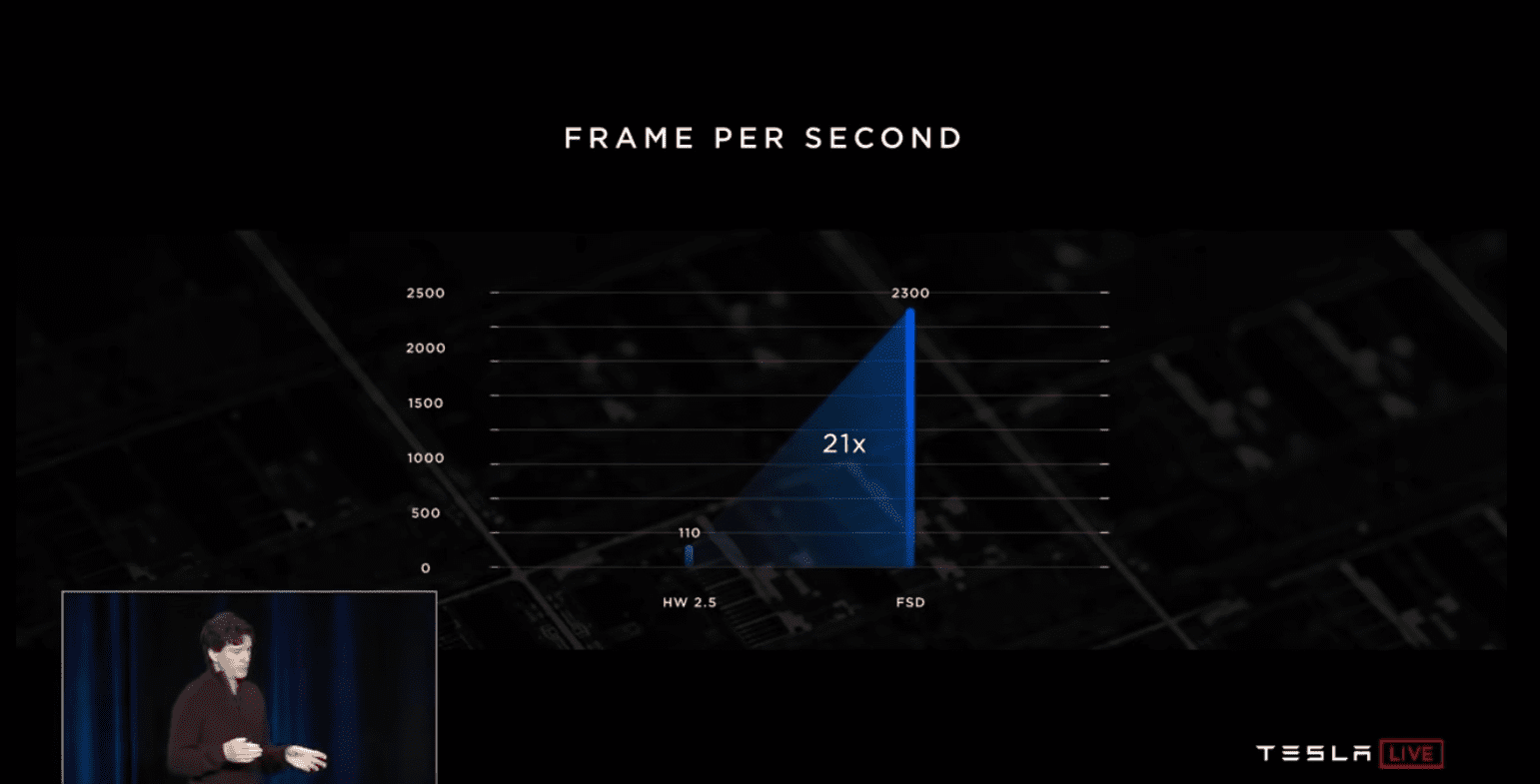

Now the next slide is undoubtedly the most important and the easiest for us to understand. The new computer will be able to process 2300 frames of information per second. This represents an enormous 21-fold increase over Hardware 2.5, which only managed 110 frames per second. More frames mean more information for the computer, which should lead to better decisions on the road.

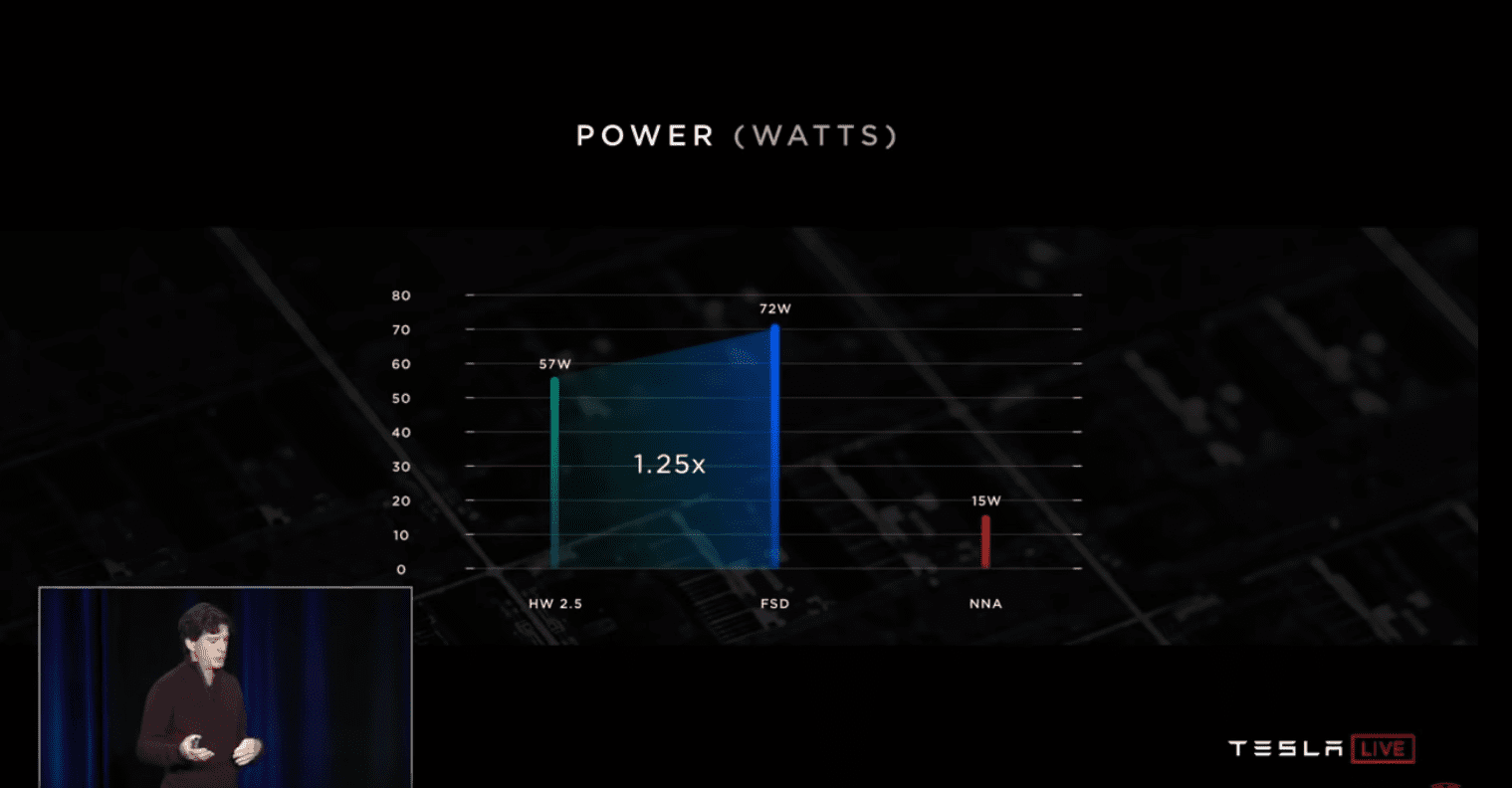

Fortunately, this massive increase in processing power did not result in much higher power consumption. Given that this computer has to be retrofitted into cars whose power supplies were designed for Hardware 2.5, that is a great success. The new hardware only uses 1.25 times the power of Hardware 2.5. The slightly increased power use will marginally affect the range of your Tesla, more so in city driving.
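
As a quick sanity check on those numbers, the short Python snippet below works out the frame-rate gain and what it implies per unit of power. It only uses the figures quoted in this article (2300 and 110 frames per second, and the 1.25x power factor); the variable names are mine, and treating the two frame rates as directly comparable is an assumption.

```python
# Figures as quoted from the presentation
hw25_fps = 110        # frames per second, Hardware 2.5
hw3_fps = 2300        # frames per second, Hardware 3
power_ratio = 1.25    # Hardware 3 power draw relative to Hardware 2.5

# How many times more frames Hardware 3 processes each second
fps_ratio = hw3_fps / hw25_fps

# Frames processed per unit of power, relative to Hardware 2.5
fps_per_watt_gain = fps_ratio / power_ratio

print(f"Frame-rate gain:          {fps_ratio:.1f}x")
print(f"Frames-per-watt gain:     {fps_per_watt_gain:.1f}x")
```

In other words, the headline 21x is really about 20.9x, and even after accounting for the extra power the efficiency gain is still well into double digits.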

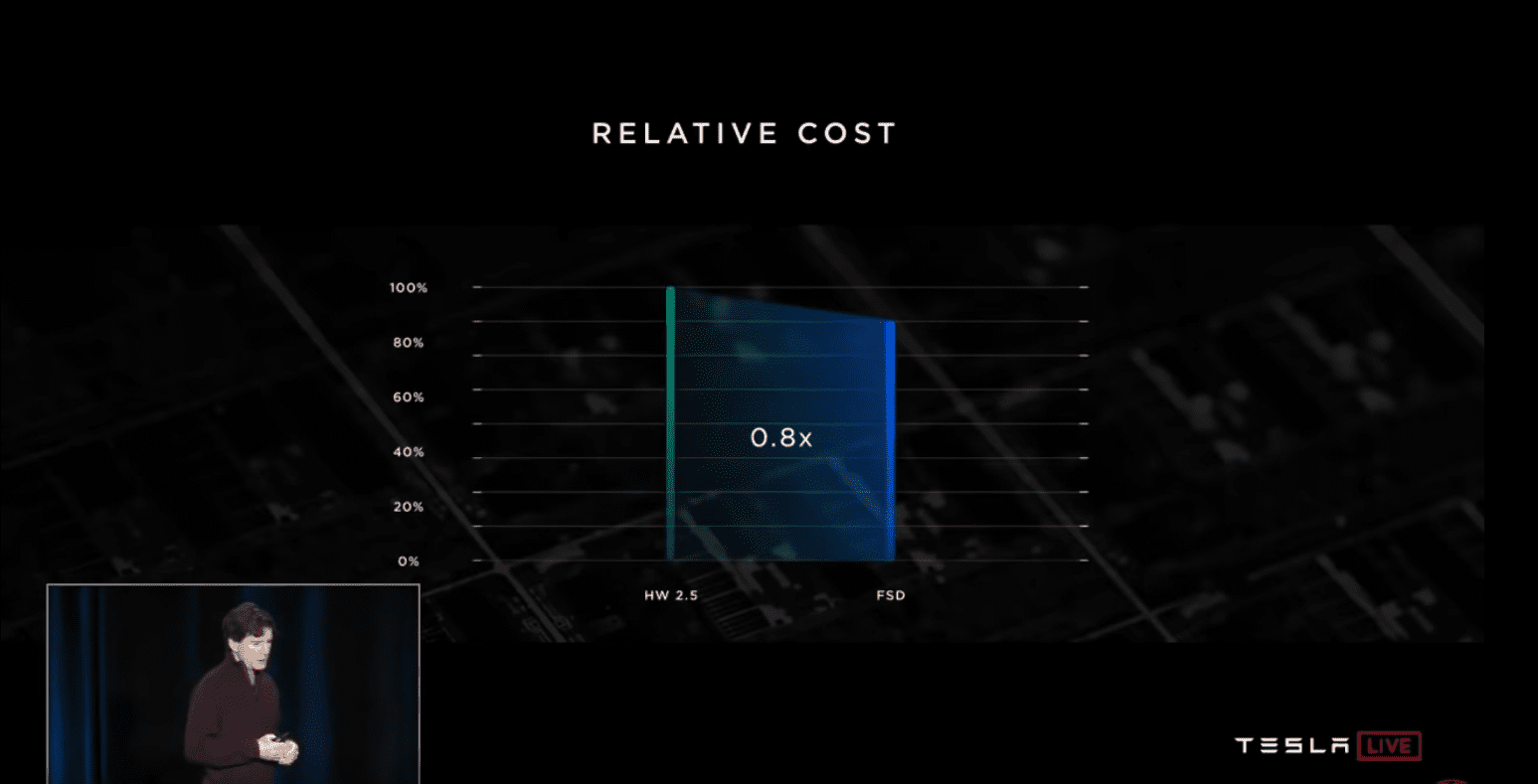

Not only that, but Hardware 3.0 is 20% cheaper to manufacture than Hardware 2.5.

Bannon closes off his hardware discussion with a comparison to Nvidia’s current self-driving computer solution, the Drive AGX Xavier. He compared the Xavier and Tesla’s new Full Self-Driving computer on the trillions of operations per second (TOPS) metric. The comparison was heavily in Tesla’s favor, as the new computer can do 144 TOPS while the Xavier can do 30 TOPS (written as 21 TOPS on the slide in error).

This did not sit well with Nvidia, which promptly released a blog post arguing that Tesla should instead compare its new chip to Nvidia’s more powerful Pegasus computer, which is designed for full self-driving.

“It’s not useful to compare the performance of Tesla’s two-chip Full Self Driving computer against NVIDIA’s single-chip driver assistance system. Tesla’s two-chip FSD computer at 144 TOPs would compare against the NVIDIA DRIVE AGX Pegasus computer which runs at 320 TOPS for AI perception, localization and path planning.”

However, Nvidia failed to mention that the Pegasus requires 500 watts of power compared to Tesla’s 72 watts. While the additional 1.25x power that Hardware 3 demands might not affect your range too much, a draw nearly seven times higher (500 W versus 72 W) might. Had Tesla been able to design for a power budget that large, who knows how much more powerful its computer would have been.
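
To put the two computers on the same footing, here is a rough performance-per-watt comparison in Python. It uses only the TOPS and wattage figures quoted above; vendor peak numbers are not measured under identical conditions, so treat the result as a back-of-the-envelope estimate rather than a benchmark.

```python
# Figures as quoted in this article (vendor-stated peaks, not measurements)
tesla_tops, tesla_watts = 144, 72
pegasus_tops, pegasus_watts = 320, 500

# Trillions of operations per second delivered per watt
tesla_tops_per_watt = tesla_tops / tesla_watts
pegasus_tops_per_watt = pegasus_tops / pegasus_watts

# How much more power the Pegasus draws than Tesla's computer
power_draw_ratio = pegasus_watts / tesla_watts

print(f"Tesla FSD computer: {tesla_tops_per_watt:.2f} TOPS/W")
print(f"Nvidia Pegasus:     {pegasus_tops_per_watt:.2f} TOPS/W")
print(f"Pegasus draws {power_draw_ratio:.1f}x the power")
```

On these numbers the Pegasus offers more raw throughput, but Tesla's computer does roughly three times as much work per watt, which matters in a battery-powered car.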

Actually, we won’t have to wait long, as Musk stated that the next version of the chip is already in the works and will be out in around two years. It will also be three times better. This does raise the question: what is there to improve on? If Hardware 3 is capable of delivering full Level 5 autonomy, then how do you improve upon that? Will the current computer still not be enough? As always, we will have to wait and see.

In case you were wondering, Musk still is not a fan of LiDAR. “LiDAR is a fool’s errand, and anyone who relies on LiDAR is doomed. It’s like having expensive appendices. You’ll see.”

So, continue to not expect any future Teslas to have LiDAR.

Musk, who is very proud of the new chip, states:

“I think if somebody started today and they were really good, they might have something like what we have today in 3 years, but in 2 years, ours will be 3 times better. … A year from now, we’ll have over a million cars with Full Self Driving computer, hardware, everything.”

Tesla Full Self-Driving Software

Continuing with ‘Tesla Autonomy Day’, Elon Musk brought out Andrej Karpathy, who is in charge of the software side of Tesla’s Full Self-Driving. Karpathy’s section was easier to follow, but that does not make it any less impressive or important to Tesla achieving Full Self-Driving. In fact, it is probably more important than the hardware side.

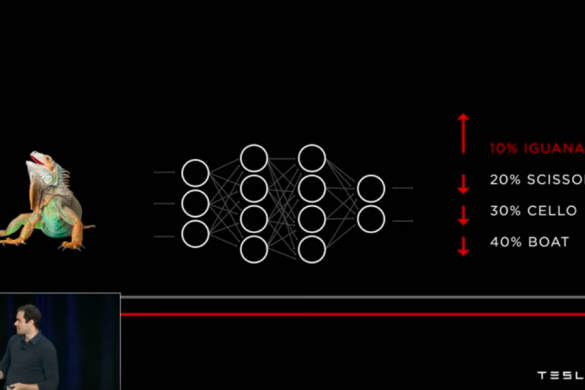

Karpathy starts off by explaining the basic principle of how you would train a neural network. In extremely simple terms, a neural network is a computer system that tries to mimic the structure of our brains to make decisions.

He gives a quick example in which an image of an iguana is shown to a neural network. At first, the network might assign only a 10% probability to the image being an iguana. After it is told that the image is an iguana, the probability it assigns to that answer will increase. After being fed thousands of different images of iguanas, the network will eventually be able to recognize an iguana reliably.
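
The iguana example can be imitated with a toy model. The Python sketch below is not a real image network: it is a single logistic unit with two made-up input features standing in for an image, trained by plain gradient descent. The feature values, learning rate, and step count are all arbitrary assumptions. It does show the core loop Karpathy described: guess, get told the right answer, adjust, repeat.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights: list, bias: float, features: list) -> float:
    """Probability the model assigns to 'this is an iguana'."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


def train_step(weights, bias, features, label, lr=0.5):
    """One gradient-descent update on the log loss for a single example."""
    error = predict(weights, bias, features) - label
    new_weights = [w - lr * error * x for w, x in zip(weights, features)]
    new_bias = bias - lr * error
    return new_weights, new_bias


# Hypothetical features standing in for an iguana photo
iguana = [1.0, 0.5]

# Start the model at roughly 10% confidence, as in the example
weights, bias = [0.0, 0.0], math.log(0.1 / 0.9)
before = predict(weights, bias, iguana)

# Repeatedly tell the model "this is an iguana" (label = 1.0)
for _ in range(20):
    weights, bias = train_step(weights, bias, iguana, label=1.0)

after = predict(weights, bias, iguana)
print(f"confidence before training: {before:.2f}")
print(f"confidence after training:  {after:.2f}")
```

A real network has millions of weights and learns from thousands of different images rather than one repeated example, but each update follows the same pattern of nudging the weights toward the labeled answer.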

So how does this work in the context of Full Self-Driving?

To teach the car about lane markings, Karpathy and his team will take an image from a Tesla and manually annotate the lane markings. They will then request similar images from the fleet of a Tesla driving forward on a street or highway. That random distribution of pictures will then have its lane markings annotated as well.

This eventually teaches the network what lane markings look like and how to treat them.

However, the network doesn’t have human intuition. At this hypothetical point in time it thinks that lane markings only go straight. So, when presented with a curve, it does not understand that the lane can curve. As such, the team has to find examples of curved roads and annotate those curved lane markings.

As you can imagine, doing this for every important aspect of the road (vehicles, lane markings, stop signs, traffic lights, etc.) is very tedious and time-consuming. In addition, it is very important that the network is taught these things in different lighting and weather conditions.

Karpathy goes on to talk about Tesla’s use of simulations and confirms that they are used extensively for developing and evaluating the software. However, simulations cannot match real-life data when it comes to sheer randomness and variety.

In Karpathy’s words, the three things that are essential for a neural network are a large dataset, a varied dataset, and a real dataset. Luckily, thanks to Tesla’s hundreds of thousands of cars driving all over the world, it has a large, varied, and real dataset.

Karpathy explains that they taught the network to detect a bicycle on the back of a car as one entity rather than as a bicycle and a car independently. This was done in the same way as with the lane markings. They would ask for images of vehicles that had bicycles on the back and annotate them appropriately until the network understood.

Tesla’s Shadow Mode is used extensively to help train the neural network. For those who do not know, Shadow Mode runs Autopilot/Full Self-Driving in the background and makes calculations on what it would do while you’re on your daily commute.

Not only is it now validating its decisions, it is also capturing data to help the neural network. Karpathy described ‘Path Prediction’ where the neural network will collect data on how you drive on a certain road. As seen in a video example, a Tesla could predict the path of a road behind a hill that it otherwise cannot see. Tesla can then compare its Full Self-Driving cars against drivers that they have recorded.

The presentation went on to reiterate that Tesla does not need LiDAR to achieve Full Self-Driving.

Very briefly, LiDAR stands for Light Detection and Ranging. It is a sensing method that shoots out a multitude of laser pulses and measures how long it takes for each to bounce back, building a 3-D map of the surrounding space from those return times. It is prominently used in satellite imaging to map out topography. Currently many autonomy companies such as Waymo believe that Full Self-Driving will require LiDAR to work. As you will have gathered from the Musk quotes so far, he disagrees with this notion.
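
The ranging part of LiDAR comes down to one formula: the pulse travels to the object and back at the speed of light, so the distance is half the round-trip time multiplied by that speed. Here is a minimal Python sketch; the function name and the 200-nanosecond example are my own choices for illustration.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre


def distance_from_round_trip(seconds: float) -> float:
    """Distance to an object given the laser pulse's round-trip time."""
    # Divide by two because the pulse covers the distance twice (out and back)
    return SPEED_OF_LIGHT_M_PER_S * seconds / 2


# Example: a pulse that returns after 200 nanoseconds
round_trip = 200e-9
print(f"{distance_from_round_trip(round_trip):.2f} m")
```

A pulse that comes back after 200 nanoseconds hit something about 30 meters away. Repeating this for many pulses fired in different directions yields the 3-D point cloud.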

Karpathy shows a six-second demo of a Tesla driving down the street. He then shows that from those six seconds, they were able to create a 3-D representation of the environment around the car, similar to what you would get from a LiDAR system.

To teach the camera system depth, Tesla uses the forward-facing radar to validate how far away the neural network thinks an object is.

Finally, Karpathy criticized LiDAR for not giving enough information compared to what you would get from a camera system. As an example, LiDAR would have trouble differentiating a big plastic bag rolling across the road from a tire. Nor would it be able to read a construction sign or tell what direction a pedestrian might be looking.

With that, Karpathy ended his portion of the presentation. It’s very cool to see the extensive work that Tesla has to do to get Full Self-Driving to work on a camera system.

Tesla Robotaxi

The last person to take the stage during ‘Tesla Autonomy Day’ was Elon Musk who discussed Tesla’s Robotaxi plan. While going through the ‘Master Plan’ Musk said that he was confident that the Tesla Robotaxis will start getting regulatory approval to be driven without drivers next year.

The Tesla Network, as it’s called, will work in a similar manner to Airbnb and Uber. Tesla owners can share their cars when they want to. The owner can further restrict the times that the car is in use and which people can use it (friends, coworkers, social media friends, etc.). Tesla will then take 25-30% of the revenue. Additionally, in places where there are not enough people sharing their cars, Tesla will have dedicated cars in place for the network.

The network will have an app very similar to what you would see on Uber or Lyft.

While all Full Self-Driving Teslas are capable of being part of the Tesla Network, the Model 3 will be the first to be eligible. Musk additionally stated that as time goes on, they will start getting rid of parts that aren’t needed, such as the steering wheel and pedals. In as little as 2-3 years he sees them building Robotaxis without items such as steering wheels. According to him, this will also bring the price down to as low as $25,000.

Musk then went into the economics of throwing your Tesla into the Tesla Network.

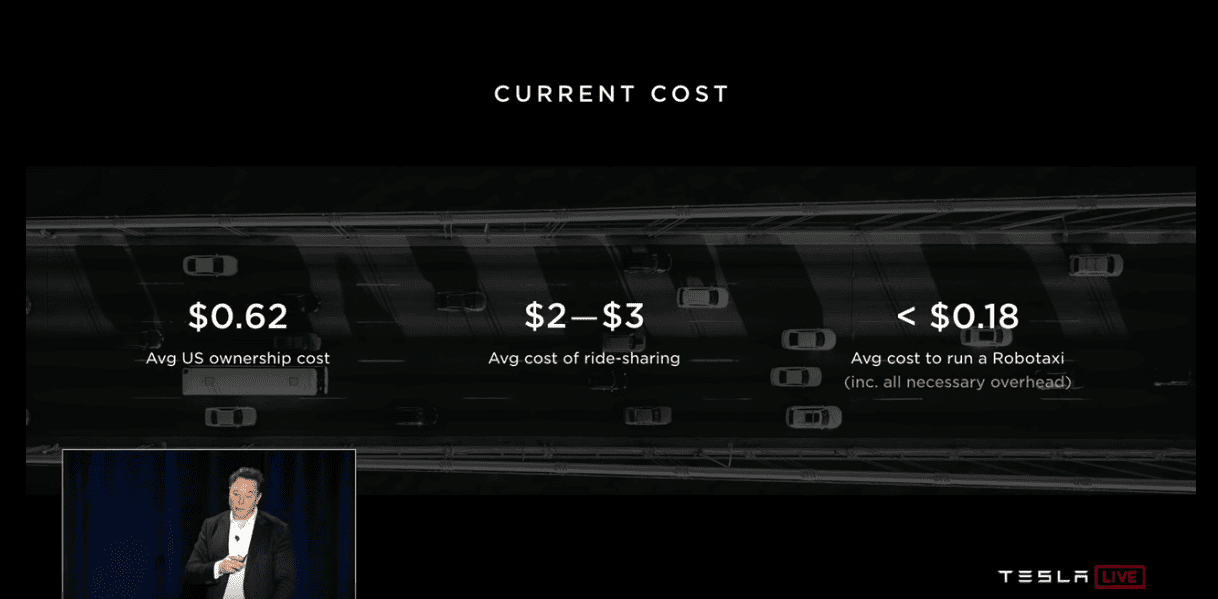

He notes that according to AAA the average cost of ownership of a gasoline car is roughly $0.62 per mile. For a Robotaxi it would be less than $0.18.

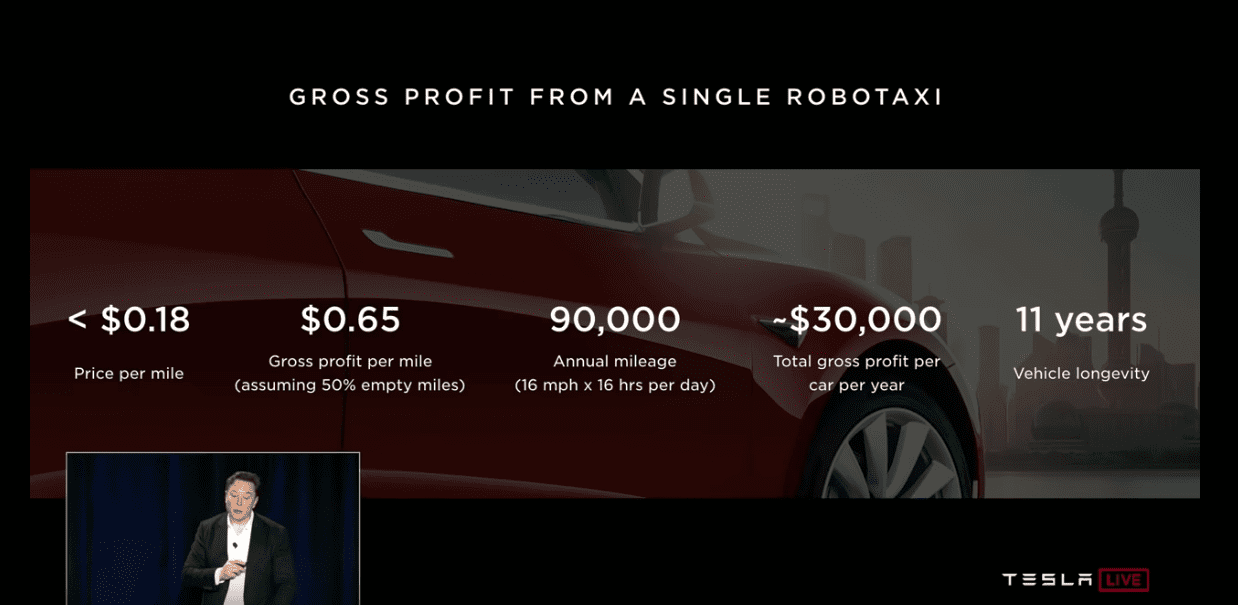

Then, assuming your Tesla drives for 16 hours every day at 16 mph, it can accrue about 90,000 miles annually. This will net you around $30,000 a year, meaning that a future $25,000 Robotaxi could pay for itself in about a year. Given that Tesla is now promising that its cars will be good for 1 million miles of use, this gives you 10-11 years of service, meaning you could potentially make $300,000-$330,000 over the lifetime of the car. This is why Musk claims that your Tesla is an appreciating asset.
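
If you want to check that math yourself, the Python snippet below reproduces it from the figures quoted above. The variable names are mine, and the $30,000 annual profit is taken as given from the presentation rather than derived here.

```python
# Figures as quoted from the presentation
hours_per_day = 16
average_speed_mph = 16
annual_miles_quoted = 90_000      # the rounded figure used on stage
annual_profit_usd = 30_000        # taken as given, not derived here
robotaxi_price_usd = 25_000
vehicle_life_miles = 1_000_000

# Miles per year from the stated hours and speed (before rounding)
annual_miles_exact = hours_per_day * average_speed_mph * 365

# Years until the car reaches its promised lifetime mileage
service_years = vehicle_life_miles / annual_miles_quoted

# Years of profit needed to cover the purchase price
payback_years = robotaxi_price_usd / annual_profit_usd

# Lifetime earnings for the low and high ends of the 10-11 year range
lifetime_low = annual_profit_usd * 10
lifetime_high = annual_profit_usd * 11

print(f"Miles per year (exact): {annual_miles_exact:,}")
print(f"Service life:           {service_years:.1f} years")
print(f"Payback period:         {payback_years:.2f} years")
print(f"Lifetime earnings:      ${lifetime_low:,} to ${lifetime_high:,}")
```

The exact mileage comes out slightly above the rounded 90,000 figure, and the payback period lands a little under a year, so the numbers on the slide are internally consistent.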

By mid-2020, Tesla will have over a million cars with Full Self-Driving capabilities that will be eligible to join the Tesla Network.

Tesla Full Self-Driving Video

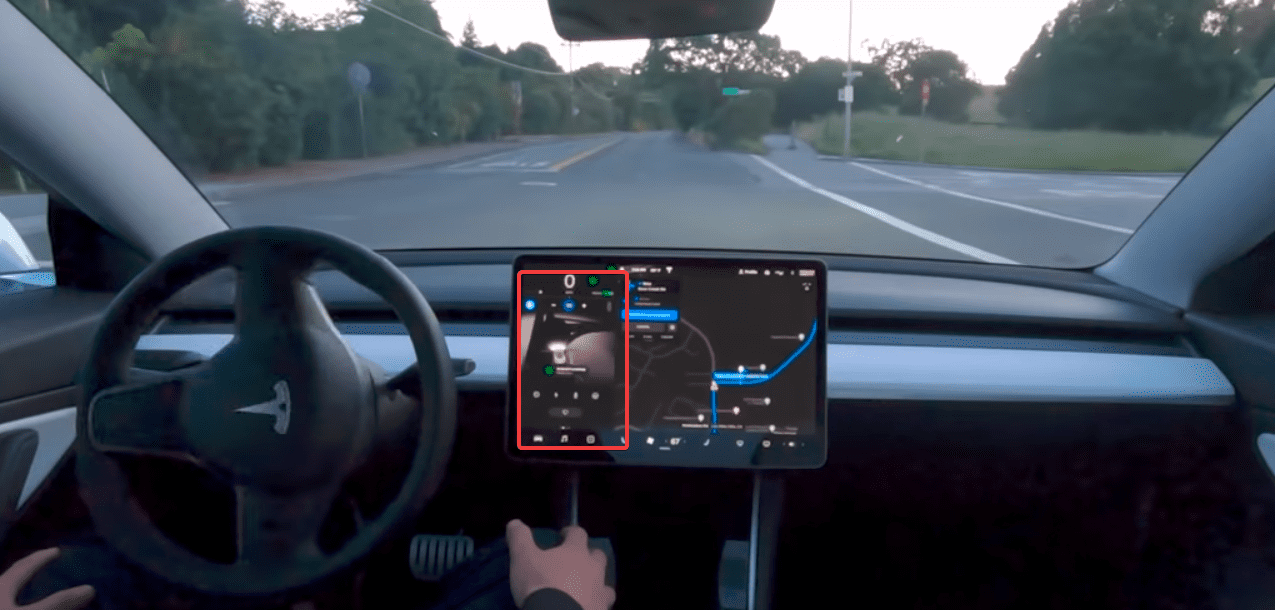

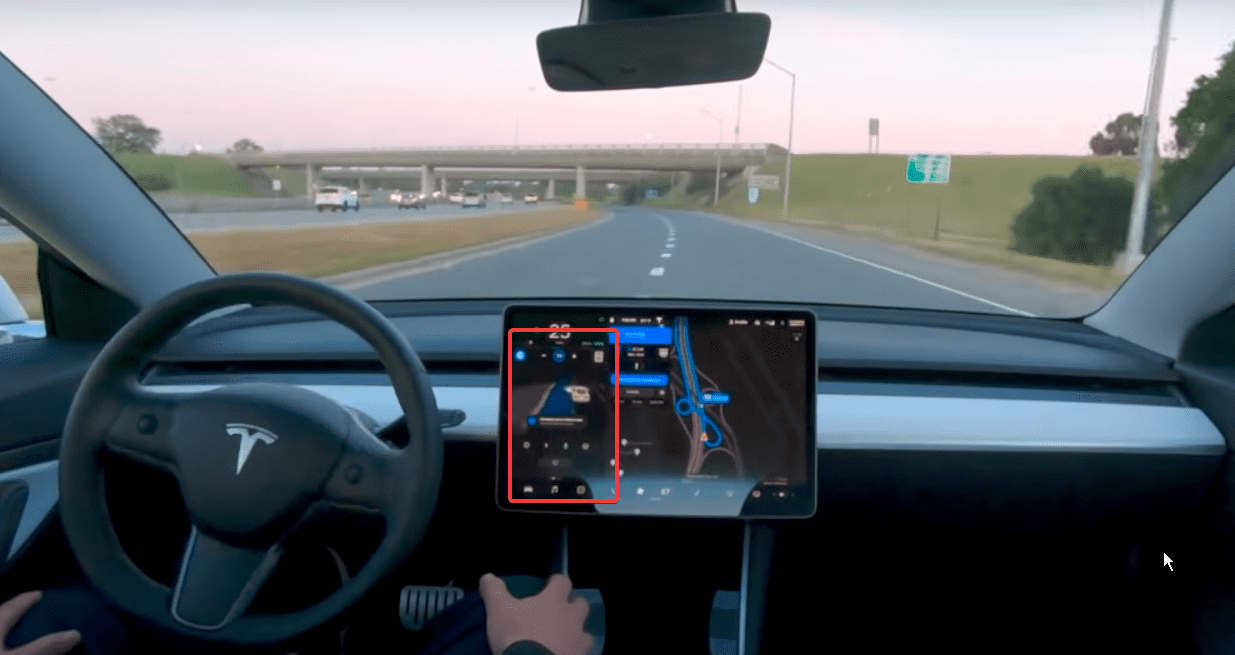

But we could not finish ‘Tesla Autonomy Day’ without seeing some Full Self-Driving in action. Thankfully, Tesla released a two-minute sped-up clip of a Model 3 driving itself on public roads. As you will notice, it can stop at intersections and doesn’t require the driver to apply pressure to the steering wheel.

While the display does not look too different from the current Autopilot setup, we do notice a couple of new “camera angles” when the car is at intersections or changing lanes that are not present in the current Autopilot system.

Overall, ‘Tesla Autonomy Day’ presented some very detailed information for us, on both the hardware and software sides of Full Self-Driving. The demo that Tesla showed was very cool, and it seems like they are making some very significant progress towards Full Self-Driving. As always, Musk is promising the world in a very short amount of time. We’ll have to wait and see if Tesla can meet the expectations.

What do you guys think? Let us know down in the comments below.