Luckily for us, autonomous driving is co-evolving alongside zero-emissions technology, and the result is a rapidly changing automotive market. For now, electric cars and autonomous vehicles seem to go hand in hand as part of our tech-forward future. With the very way we drive (or are driven in) our vehicles at stake, companies are racing to set the next industry standard for both.

While many would argue that Tesla has won the EV space with an electric car that is both feasible and desirable, autonomous driving is far from perfected. With multiple approaches being pursued and an endless list of companies in play, one of them sees things a bit differently. Light, a self-described depth perception technology company, aims to diminish the importance of both LIDAR and radar for low-level autonomy using only a couple of cameras.

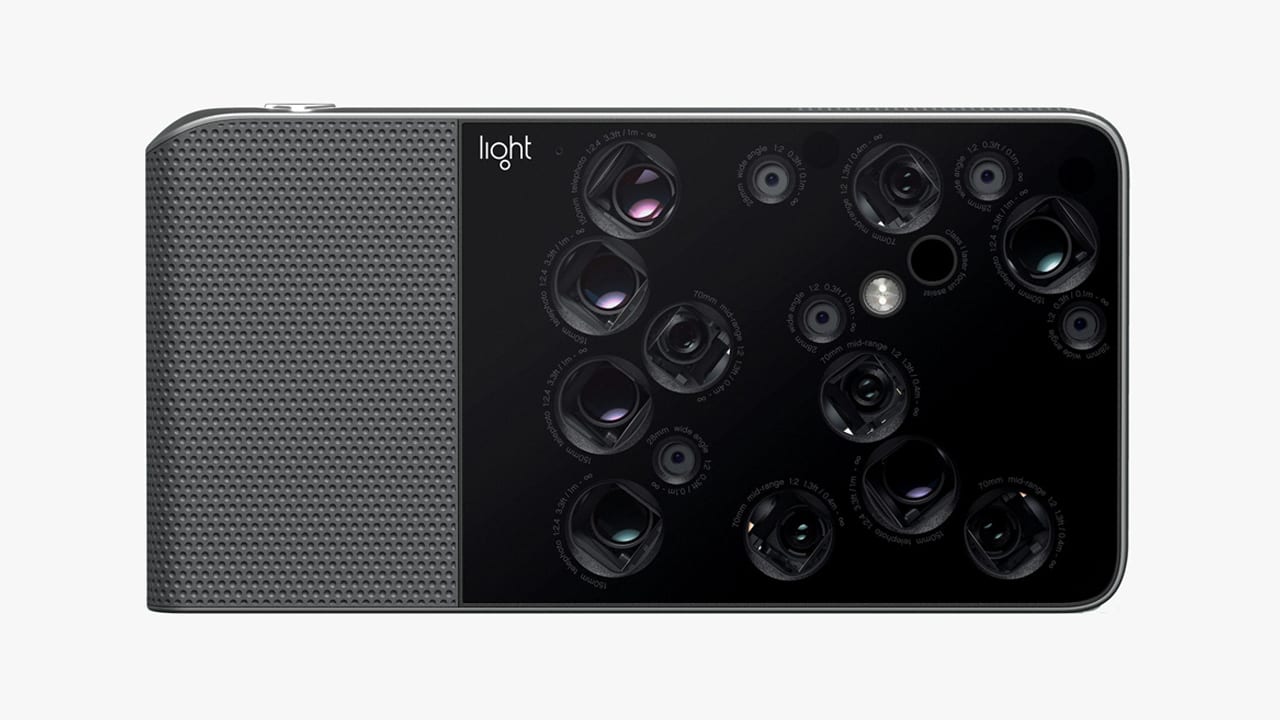

As a company, Light did not start off in the autonomous driving realm. The California-based company first came onto the scene with its rather wacky-looking L16 camera in 2015. The Frankensteined pocket camera had 16 different lenses scattered across its back. Through software, the camera could simulate pictures taken at a wide range of focal lengths and capture different depths of field at once. Thanks to the sheer number of lenses, it also promised very good low-light performance. The camera launched to mixed reviews, and its rather high price of about $2,000 didn't endear it to many consumers. Still, even though reviewers called the results iffy, the technology drew attention to the company.

A $121 million investment round led by SoftBank kept the company in the tech game. Light went on to help build the camera of the Nokia 9 PureView, once again showing off its atypical design, though this time with five lenses instead of 16. The company also secured a partnership with Sony to work on other smartphone cameras. However, somewhere along the line, the team decided to apply its knowledge and technology in a slightly different direction.

Drawing on its experience with depth processing using only camera lenses, the team set out to build the tools for an advanced driver assistance system (ADAS) capable of a high level of autonomy without expensive LIDAR. Many companies (Tesla excepted) consider LIDAR the focal point of making autonomous systems work. However, even as its price steadily falls, it remains the most expensive sensor in many ADAS packages.

Light's CEO, Dave Grannan, was kind enough to sit down for some questions and walk us through a short demo:

Clarity

Light calls its ADAS ‘Clarity’, a name that needs no explanation. From a hardware standpoint, the system is very simple: at minimum, it uses only two industry-standard cameras and Light's chip. To explain the system, the company makes numerous comparisons to human eyes. We humans can instinctively and easily judge the rough distance of objects around us. Because our eyes are spaced apart, each sees an object from a slightly different angle, and our brain uses the resulting parallax to work out an approximate distance. In this analogy, the two cameras in Light's system are our two eyes, and the silicon chip is our brain. But instead of rough estimates, Light says its proprietary software will deliver measurements precise down to the centimeter.
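To make the parallax idea concrete, here's the standard textbook relation for two identical, rectified cameras: if the cameras sit a baseline B apart with a focal length f (in pixels), a point that shows up d pixels apart in the two images (its disparity) is at depth Z = f·B/d. This is a generic sketch, not Light's proprietary method; the focal length, baseline, and pixel columns are made-up values.

```python
def disparity_px(x_left_px: float, x_right_px: float) -> float:
    """Horizontal shift of the same point between rectified left/right images."""
    return x_left_px - x_right_px


def depth_from_disparity(focal_px: float, baseline_m: float, disparity: float) -> float:
    """Pinhole stereo relation: depth Z = f * B / d (metres when B is in metres)."""
    if disparity <= 0:
        # Zero disparity means the point is effectively at infinity (or the match is wrong).
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity


# Hypothetical rig: 2000 px focal length, cameras mounted 0.5 m apart.
d = disparity_px(640.0, 630.0)  # same point matched at columns 640 and 630
print(depth_from_disparity(2000.0, 0.5, d))  # 100.0 (metres)
```

Note the inverse relationship: halving the disparity doubles the depth, which is why distant points are the hardest to pin down.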

Clarity does not rely on any special type of camera; most of the magic is in the software. Because automotive-grade cameras are increasingly abundant, the system is an affordable option for any company seeking anything from the most basic features all the way to full autonomy. Thanks to the modular software, companies can add more cameras, radars, a LIDAR system, or even a neural net to achieve the exact level of autonomy their customers need. Light pitches Clarity as the missing piece needed to achieve level four or five autonomous driving, but says it can also drastically improve everything in between.

“For true level 4/level 5 autonomy, we would envision a Light Clarity solution that is 360 degrees around the vehicle, LIDAR covering the same range, and then radar probably in the forward-facing direction…”

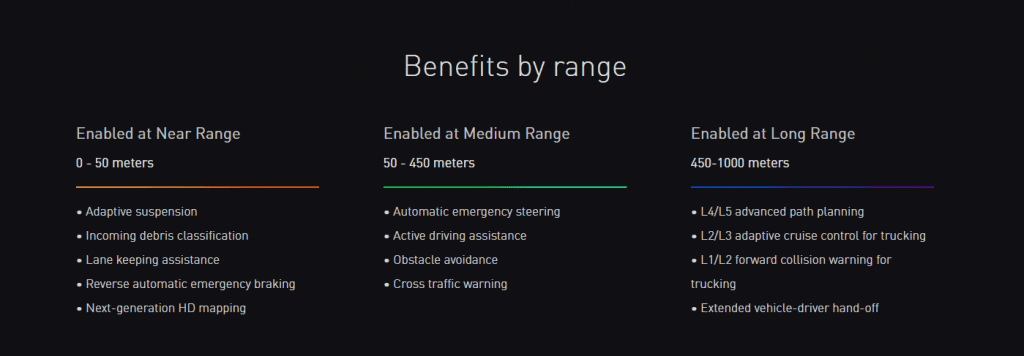

The company touts the cameras' range as a major benefit: about 1,000 meters, much farther than the roughly 250 meters of automotive LIDAR systems. This allows for more advanced route planning and gives the car important data much sooner. The company has also listed some of the features available at the system's different visual ranges.
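Long range is where stereo geometry gets demanding. Differentiating Z = f·B/d shows that a fixed disparity-matching error δd turns into a depth error of roughly δZ ≈ Z²·δd/(f·B): it grows with the square of the distance and shrinks as the camera spacing or focal length grows. The sketch below only illustrates that scaling under assumed camera parameters; it says nothing about the actual baseline, optics, or matching accuracy of Light's hardware.

```python
def stereo_depth_error(depth_m: float, focal_px: float, baseline_m: float,
                       disparity_err_px: float) -> float:
    """First-order depth uncertainty for pinhole stereo: dZ ~ Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_err_px / (focal_px * baseline_m)


# Hypothetical parameters: 2000 px focal length, quarter-pixel matching error,
# compared for a narrow (0.5 m) and a wide (1.5 m) camera spacing.
for baseline in (0.5, 1.5):
    for depth in (100.0, 250.0, 1000.0):
        err = stereo_depth_error(depth, 2000.0, baseline, 0.25)
        print(f"B={baseline} m, Z={depth:.0f} m -> about ±{err:.1f} m")
```

Doubling the distance quadruples the error, so wider camera spacing, longer lenses, and finer sub-pixel matching are the main levers for pushing accurate stereo ranging farther out.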

To better understand the system, its costs, and its benefits, we need to look at the current industry standards.

LIDAR

LIDAR is a decades-old technology, and its use in automobiles significantly predates our current crop of autonomous cars. One of the first automotive uses dates back to the early '90s, when Mitsubishi used it in a warning system that alerted drivers to nearby objects. Of course, autonomous cars put it to far more extensive use than warning systems.

On a basic level, LIDAR works by projecting a laser onto the environment and measuring how long the light takes to bounce back to the sensor. The faster a beam returns, the closer the object in that direction. The more beams the system can send out to cover the area in question, the more detailed the resulting map. This is where consumer-friendly pricing quickly parts ways with the commercial-grade hardware used on satellites.
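The time-of-flight principle boils down to one line: distance equals the speed of light times the round-trip time, divided by two (the pulse travels out and back). The second function hints at why beam count matters: with a fixed angular spacing between beams, neighboring points land farther apart the farther away they hit. The 0.1-degree spacing is an assumed example value, not the spec of any particular sensor.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Time of flight: the pulse travels out and back, so halve the total path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def point_spacing(range_m: float, angular_resolution_deg: float) -> float:
    """Approximate gap between neighbouring beams at a given range (arc length R * theta)."""
    return range_m * math.radians(angular_resolution_deg)


# A return after one microsecond corresponds to roughly 150 m.
print(f"{range_from_round_trip(1e-6):.1f} m")
# Hypothetical 0.1 degree beam spacing: points land roughly 0.35 m apart at 200 m.
print(f"{point_spacing(200.0, 0.1):.2f} m")
```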

The system has proven useful in surveying, a multitude of scientific disciplines, and the military. Today, many autonomous tech companies treat LIDAR as an irreplaceable part of their sensor suites. Most recently, we saw LIDAR used in the Lucid Air's autonomy package. While the system does provide precise measurements, it isn't without its drawbacks.

| Pros | Cons |
|---|---|
| High precision | Limited range and resolution |
|  | Expensive |

LIDAR reportedly struggles in inclement weather, which can interfere with how the laser beams travel through the air. Additionally, depending on how advanced the unit is, it may not capture the entire scene in front of the car, leaving gaps in the measurements. LIDAR also doesn't capture a visible-light image, which means that on its own it cannot read a stop sign or determine whether a traffic light is red, yellow, or green. As such, LIDAR units are still paired with regular cameras, and the data from the two is combined.

Lastly, there is the cost. Elon Musk famously turned away from LIDAR when starting Tesla's Full Self-Driving system, citing its expense. LIDAR remains the most expensive part of any company's sensor suite, which limits the affordability of many self-driving systems as a whole. With a space-grade LIDAR system, full self-driving would be far easier. The race isn't just toward ever-better autonomy, but toward consumer-friendly, feasible pricing alongside those improvements.

Radar

Radar is an older technology that serves as the foundation for LIDAR (the name "lidar" was originally a blend of "light" and "radar"). Radar also found its way into cars well before the autonomous movement. Once again, it was used to determine the distance of objects around the car for early warning systems and other ADAS features. Think backup sensors and forward collision warnings.

| Pros | Cons |
|---|---|
| Cheap | Limited range |
| Not impeded by poor weather | Not good at telling small objects apart |

The principle of radar is just as simple as that of LIDAR. Instead of laser beams, the system emits radio waves and measures how long they take to return. The faster the return, the closer the object. Because radar doesn't depend on visible light, it works well when visibility is low, such as in fog or heavy rain. However, its low resolution and difficulty detecting small objects make radar on its own insufficient for any serious attempt at autonomy. Still, it is a standard part of autonomous systems, helping during poor visibility and acting as a redundancy for close-range measurements.
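Using radar as a redundancy means its range reading has to be reconciled with what the other sensors report. One textbook way to do that, assuming each sensor's error is independent and roughly Gaussian with a known standard deviation, is an inverse-variance weighted average: more trustworthy sensors get more weight, and the fused estimate is tighter than any single input. This is a generic illustration, not the fusion scheme of any particular automaker, and the sensor accuracies below are invented.

```python
import math
from typing import Iterable, Tuple


def fuse_ranges(estimates: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted mean of (range_m, sigma_m) pairs; returns (range, sigma)."""
    pairs = list(estimates)
    if not pairs:
        raise ValueError("need at least one estimate")
    weights = [1.0 / sigma ** 2 for _, sigma in pairs]
    total = sum(weights)
    fused = sum(w * r for w, (r, _) in zip(weights, pairs)) / total
    # Fused uncertainty is smaller than the best individual sigma.
    return fused, math.sqrt(1.0 / total)


# Hypothetical readings of the same car ahead: camera and radar disagree slightly.
camera = (41.0, 1.0)  # metres, assumed 1 m standard deviation
radar = (40.0, 0.5)   # metres, assumed 0.5 m standard deviation
print(fuse_ranges([camera, radar]))
```

Because the radar is assumed to be twice as precise here, it gets four times the weight, pulling the fused range much closer to its reading.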

Monocular

As the name implies, a monocular system uses either a single camera or multiple cameras that are not spaced far enough apart for the system to make use of stereopsis (depth perception from two offset viewpoints).

The system still offers greater resolution than LIDAR and can identify things such as stop signs or which light on a traffic light is illuminated, but Light claims its depth perception is limited compared to the Clarity system. You can quickly test the difference yourself by covering one eye: you still perceive depth, but not as precisely as with both eyes open.

| Pros | Cons |
|---|---|
| Cheap | Relies on machine learning data to work well |
| Good resolution |  |

To make a monocular system good enough for autonomous cars, objects in view are tagged (e.g., a car, a person, a traffic cone) so the system knows roughly what dimensions such an object should have, which lets it refine its measurements even without the benefit of parallax. This tagging, of course, relies on feeding an AI huge amounts of data to train it to recognize various objects and situations. As a result, the system's effectiveness correlates almost directly with the amount of training data that can be obtained. Even with machine learning and an adequate neural network, Tesla has found the approach difficult to perfect despite billions of miles of data.
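The size-prior trick can be sketched with the same pinhole camera model: if the detector labels an object a person and people are assumed to be about 1.7 m tall, an image height of h pixels implies a distance of roughly Z = f·H/h. The class heights and focal length below are illustrative assumptions, not values from any production system. The sketch also exposes the weakness behind the Tesla example later in this article: the estimate depends entirely on the label, so a life-size drawing of a person yields exactly the same "distance" as a real one.

```python
# Assumed typical heights per class (metres); in practice such priors come from data.
TYPICAL_HEIGHT_M = {"person": 1.7, "car": 1.5, "traffic_cone": 0.7}


def monocular_distance(label: str, bbox_height_px: float, focal_px: float) -> float:
    """Single-camera range estimate from a known object size: Z = f * H / h."""
    if bbox_height_px <= 0:
        raise ValueError("bounding box height must be positive")
    return focal_px * TYPICAL_HEIGHT_M[label] / bbox_height_px


# A 34 px tall "person" seen through a hypothetical 2000 px focal-length lens:
print(f"{monocular_distance('person', 34.0, 2000.0):.1f} m")  # 100.0 m
```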

Clarity vs LIDAR

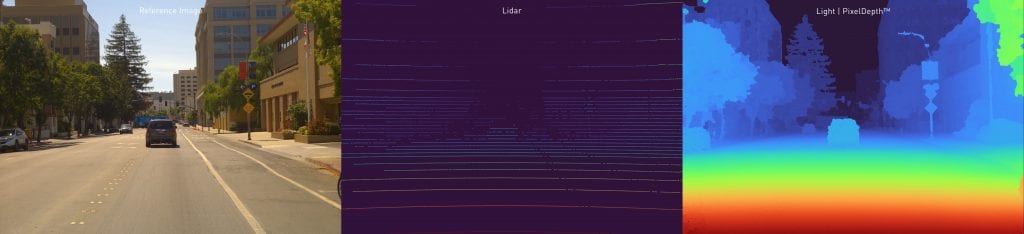

Light wants Clarity to prove that LIDAR is not the be-all and end-all of high-level autonomous driving. As mentioned, Light believes its imaging system will provide unparalleled resolution and range at a lower cost, helping make autonomous driving more accessible in the future. To illustrate the differences between the two systems, Light provided the following comparison of the same scene as output by an industry-standard LIDAR and by its Clarity system.

In the example above, you can see the individual points that the LIDAR measured and returned. While it provides accurate measurements for a few hundred meters ahead, it cannot, for instance, read the signs on the side of the road. You will also notice that Clarity can see farther than the LIDAR, picking up the traffic lights down the street.

However, this only removes the need for LIDAR at lower autonomy levels. Achieving level four or level five self-driving robo-taxis will still require Clarity's software in combination with LIDAR and radar.

Clarity vs Tesla Autopilot

Going up against Tesla Autopilot, however, is a different story. The EV giant rejected LIDAR early in development and instead chose cameras and radar as its main sensors. However, the forward-facing cameras on Teslas are not spaced apart like those in Light's system and lack the necessary software, so they provide a monocular view instead. That is where Light believes it has the advantage over Autopilot.

Light writes the following on its website about the shortcomings of a monocular camera system:

Monocular vision systems often utilize AI inferencing to segment, detect, and track objects, as well as determine scene depth. But they are only as good as the data used to train the AI models, and are often used in-conjunction with other sensor modalities or HD maps to improve accuracy, precision, and reliability. Despite such efforts, “long tail” edge and corner cases remain unresolved for many commercial applications relying on monocular perception.

Dave Grannan showed us an example of how this imperfect system, despite billions of miles' worth of data, can still make some silly mistakes. Below, you'll see Tesla's system failing to distinguish between a drawing of a human and an actual human simply because it lacks 3D recognition.

Car kept jamming on the brakes thinking this was a person 🤦♂️ The NN was dreaming @greentheonly pic.twitter.com/ARQn23oXLl

— Electric Future 🇦🇺 🚗⚡☀️ (@electricfuture5) September 26, 2020

With the benefit of a binocular view, Light claims that Clarity has a much better grasp of a scene's depth, allowing for more precise measurements without extensive machine learning. The system is not entirely free of machine learning, however, when it comes to level four or five autonomous driving. In those cases, a trained AI will need to recognize and tag things such as street signs and traffic lights. At lower levels of autonomy, though, Light claims it doesn't need to train any sort of neural net for the system to function properly.
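Here's one way depth could catch the "drawing of a person" failure: a flatness test, sketched as a hypothetical example rather than Light's actual algorithm. For rectified stereo, the disparity of any flat surface is an affine function of pixel position, d = a·u + b·v + c, so a least-squares plane fit over a detected object's pixels leaves almost no residual when the object is printed on a bus or wall, but a clear residual when it has real 3D relief. The 0.2-pixel threshold and the synthetic disparity maps are assumptions; real stereo noise, and distant objects whose relief shrinks to a fraction of a pixel, would need more careful handling.

```python
import numpy as np


def planar_residual_px(u: np.ndarray, v: np.ndarray, disparity: np.ndarray) -> float:
    """RMS residual (pixels) after least-squares fitting disparity = a*u + b*v + c."""
    design = np.column_stack([u, v, np.ones_like(u)])
    coeffs, *_ = np.linalg.lstsq(design, disparity, rcond=None)
    residual = disparity - design @ coeffs
    return float(np.sqrt(np.mean(residual ** 2)))


def looks_flat(u, v, disparity, threshold_px: float = 0.2) -> bool:
    """True if one plane explains the region well (e.g. a picture on a surface)."""
    return planar_residual_px(u, v, disparity) < threshold_px


# Synthetic 20 x 40 pixel detection box (hypothetical data).
u, v = np.meshgrid(np.arange(20.0), np.arange(40.0))
u, v = u.ravel(), v.ravel()
painted = 50.0 + 0.02 * u - 0.01 * v                  # drawing on a slightly angled bus
real = 50.0 + 1.0 * (1.0 - ((u - 9.5) / 10.0) ** 2)  # torso bulges toward the camera

print(looks_flat(u, v, painted))  # True
print(looks_flat(u, v, real))     # False
```

A monocular detector sees both boxes as the same "person"; only the depth structure tells them apart.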

“Obviously we feel that cameras can solve autonomous needs, that’s our approach. I think Tesla’s challenge I think is going to be, because it is a largely machine learning based system, how do they ultimately cover all the longtail edge cases that may come about? There are just millions of millions of edge cases and when you think about how many millions of pieces of data you need to train on for each of those, it looks mathematically pretty challenging. I know they’re trying to augment, it appears they are through using things like structure from motion, but I think even their system would be improved by incorporating something like Clarity.”

Like Tesla, Light will be able to continuously improve upon the system through over-the-air software updates.

Regarding a comparison between Clarity's depth-perception-based system and a machine-learning-driven monocular system like Tesla's, Dave Grannan said the following:

“I will be bold or maybe provocative and say yea, I don’t think as an industry we can ever get to true level five autonomous driving at large scale, meaning getting beyond the range of geofenced areas and things like that without Clarity. What’s missing is a real-time understanding of the 3D structures in the field of view. Without that we’re always going to have the problem… of systems seeing a drawing of a man on the back of a bus and thinking it’s a real man. Or, far more dangerously maybe somebody in a city scene is unloading a truck… and that person being misidentified as a picture on the back of a truck”

Outlook

As with any emerging technology, it is still too soon to make concrete judgments. There are still no clear winners in the autonomous vehicle sector, and Light has yet to put this technology through real-world testing. So far, the company has only gone as far as gathering image data and developing the necessary software.

Like the other systems covered here, Clarity is one piece of a larger whole. Most autonomous cars combine several systems to meet their autonomy needs; even monocular, AI-driven systems like Tesla's use radar as both a supplement and a safeguard. Clarity is no exception: while it is said to achieve low-level autonomy with a simple two-camera setup, higher levels and features will require additional equipment.


It's still anyone's game, but an unexpected innovation might be all it takes to unlock the door to robo-taxis. Is Light's Clarity platform the last piece of the puzzle? We don't know. The technology we were shown, however, is very interesting and, for lack of a better word, just makes sense. And Clarity is about more than level five: it aims to meet the needs of every level in between, with a massive cost advantage.

“So our solution will range based on the use case, like we talked about, whether it’s L2/L3 kind of applications or more autonomous L4/L5. For a forward-facing three-camera solution we anticipate the price to the OEM will be around $150. Now, going to a complete 360-degree coverage of the vehicle, for a full autonomous solution will be closer to $1,000.”

Light is promising some important partnerships, yet to be revealed, to help with testing and developing the product. Given its high-profile partners and investors in the past, we are curious to see who will provide the testbed for its system. The company simply aims to provide the hardware and software behind Clarity, leaving automakers to handle the rest.

Light hopes to put Clarity in a vehicle next year and to make it available as a consumer option by 2023.